It usually starts small. A scanner flags a critical vulnerability, and a patch deadline is set. The ticket gets created but sits behind feature work. A week later, the SLA clock runs out, and the vulnerability is still in production. The compliance team is asking for an update, and leadership wants to know how long the system has been exposed. The problem isn’t that you didn’t see the vulnerability; it’s that the resolution stalled because ownership, priority, or process broke down.

More than 33,000 new vulnerabilities (CVEs) were disclosed, each demanding attention, assessment, and action. Yet only 12% of those rated "Critical" genuinely warranted that urgency in real-world contexts.

This guide gives you eight immediate, battle-tested playbook actions to stabilize the response, regain control, and redesign your remediation process so breaches become brief incidents, not recurring failures.

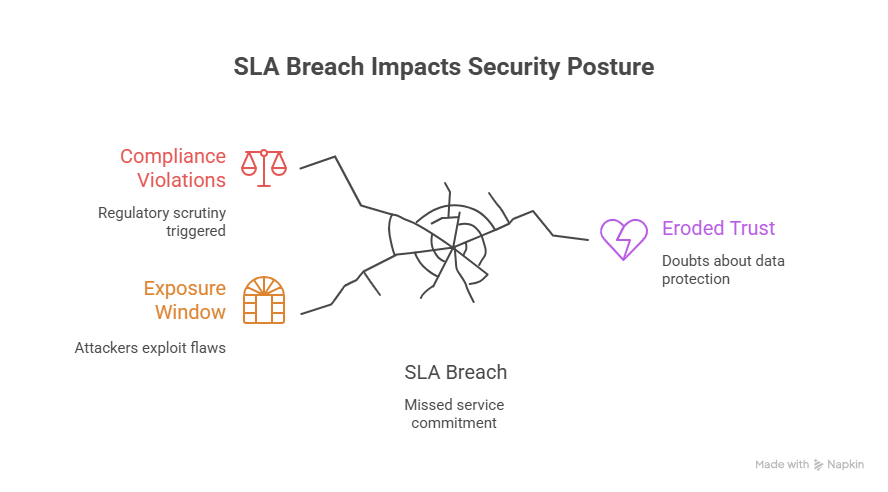

Service Level Breach Impacts Security Posture

What Is an SLA Breach and Why Is It So Risky?

An SLA breach happens when a team fails to meet a commitment defined in its Service Level Agreement, such as patching a vulnerability, escalating an incident, or resolving critical alerts within the required timeframe. Beyond a missed deadline, it represents a breakdown in risk management and reliability.
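To make the definition concrete, here is a minimal sketch of how a team might check whether a finding has breached its SLA. The severity-to-window mapping (`SLA_WINDOWS`) and the `Finding` fields are hypothetical placeholders, not values from any standard or tool, so substitute your own policy.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional

# Hypothetical remediation windows per severity; replace with your own SLA policy.
SLA_WINDOWS = {
    "critical": timedelta(days=7),
    "high": timedelta(days=30),
    "medium": timedelta(days=90),
}

@dataclass
class Finding:
    id: str
    severity: str
    detected_at: datetime
    resolved_at: Optional[datetime] = None

def sla_deadline(finding: Finding) -> datetime:
    """Return the time by which the finding must be resolved."""
    return finding.detected_at + SLA_WINDOWS[finding.severity]

def is_breached(finding: Finding, now: datetime) -> bool:
    """A finding is breached if it was resolved, or is still open, past its deadline."""
    # Resolved findings are judged by their resolution time; open ones by "now".
    closed_or_now = finding.resolved_at or now
    return closed_or_now > sla_deadline(finding)

# Example: a critical finding open for 9 days against a 7-day window.
now = datetime(2024, 6, 10, tzinfo=timezone.utc)
f = Finding("VULN-1", "critical", detected_at=datetime(2024, 6, 1, tzinfo=timezone.utc))
print(is_breached(f, now))
```

The check compares against the resolution time when one exists. That way, a finding fixed late still counts as a breach in reporting, not only findings that are still open.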

The consequences of an SLA breach extend far beyond contracts. Each missed patch window lengthens the period of exposure and increases regulatory risk. Continuous security approaches such as penetration testing as a service (PTaaS) can help surface vulnerabilities earlier, leaving more time to fix them before the SLA runs out.

Perhaps most damaging, SLA breaches erode customer and stakeholder trust, since failing to meet deadlines raises doubts about your ability to protect data and deliver reliably.

Common Scenarios That Lead to an SLA Breach

SLA breaches rarely happen in a vacuum. They’re usually the byproduct of everyday friction inside security and engineering teams. A few of the most common triggers include:

- Missed patch deadlines. A critical vulnerability is logged and the patch is ready, but it sits behind feature work or testing bottlenecks. By the time someone circles back, the SLA window has closed.

- Unescalated incidents. Alerts trigger at the right time, but no one escalates within the required window, either because the signal gets lost in the noise or because the on-call engineer is juggling too much.

- Tickets that rot. Issues are opened in Jira or ServiceNow, then quietly roll from sprint to sprint without closure. They’re technically “tracked” but functionally abandoned.

- Broken handoffs. Security thinks engineering owns the fix, engineering assumes security will follow up, and the vulnerability is stuck between teams.

None of these scenarios is unusual, and that’s the point. Service Level Agreement breaches often grow out of small, everyday misses, the exact fragmentation that unified vulnerability management (UVM) is designed to address.
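The "tickets that rot" pattern is one of the easier ones to catch automatically. The sketch below flags open tickets that keep rolling between sprints or have gone quiet. The `Ticket` fields are hypothetical and do not correspond to the Jira or ServiceNow APIs. You would populate them from whatever export your tracker provides. Both thresholds are illustrative defaults, not recommendations.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

@dataclass
class Ticket:
    key: str
    sprints_carried_over: int  # hypothetical field: times the ticket moved to a new sprint
    last_updated: datetime
    status: str

def find_rotting_tickets(tickets, now, max_carryovers=2, max_idle=timedelta(days=14)):
    """Return keys of open tickets that roll between sprints or sit idle too long."""
    return [
        t.key
        for t in tickets
        if t.status != "done"
        and (t.sprints_carried_over > max_carryovers or now - t.last_updated > max_idle)
    ]
```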

In security operations, a Service Level Agreement breach signals that something is broken in your workflows: ownership confusion, tool sprawl, or stalled communication. When this kind of deadline slip occurs, time is critical. Here’s what to do immediately.

Understanding Service Level Agreement Breach Scope

8 Immediate Actions to Take After a Service Level Agreement Breach

When a Service Level Agreement breach occurs, the outcome often depends on how quickly and systematically the team responds. Without a plan, the disruption drags on; with clear steps, the issue can be contained and the risk of repeat failures reduced.

The following eight actions are designed to guide you through the critical hours after a breach. Each is practical and reflects what experienced engineering and security teams do when deadlines slip. Many of these patterns mirror the broader vulnerability remediation challenges teams face when trying to keep pace with patching and deadlines.

1. Confirm the Scope of the Breach

The first step after a Service Level Agreement breach is understanding precisely what went wrong. Was it a single vulnerability that slipped past its patch deadline, or are multiple assets and teams affected?

Confirming the scope starts with figuring out what is actually at risk. Is it one system or many? A handful of users or an entire service? Sometimes the breach is minor and contained, and the issue can be resolved quickly with targeted work. Other times, it points to something bigger, like a backlog that has quietly grown out of control or tooling that isn’t catching what it should. If you skip this step, the rest of the response will be guesswork. Take notes as you go, because the details you capture now will be essential when you dig into the root cause later.
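One quick way to answer "one system or many?" is to group breached findings by asset and owning team. The sketch below assumes findings have been exported as simple records with hypothetical `asset` and `team` fields. The `systemic_threshold` that separates a contained miss from a systemic problem is an arbitrary illustration.

```python
from collections import Counter
from dataclasses import dataclass

@dataclass
class BreachedFinding:
    id: str
    asset: str  # hypothetical field: affected host, service, or repo
    team: str   # hypothetical field: team currently assigned

def summarize_scope(findings: list[BreachedFinding], systemic_threshold: int = 3) -> dict:
    """Summarize how widespread a set of SLA breaches is.

    If breaches span at least `systemic_threshold` assets or teams, flag them as
    likely systemic (backlog or tooling) rather than a one-off miss.
    """
    by_asset = Counter(f.asset for f in findings)
    by_team = Counter(f.team for f in findings)
    systemic = len(by_asset) >= systemic_threshold or len(by_team) >= systemic_threshold
    return {
        "total": len(findings),
        "assets": dict(by_asset),
        "teams": dict(by_team),
        "likely_systemic": systemic,
    }
```

The returned summary also doubles as the first entry in your notes for the later root cause review.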

2. Alert the Right Internal Stakeholders

When a Service Level Agreement breach is confirmed, notify engineering leads, application security, incident response, and compliance stakeholders. Give people the facts up front: what was missed, when it happened, and why it matters. Skip the blame and lengthy explanations, because they only slow things down. The point is to align the right people quickly so they can act. If communication drags, the exposure window grows, and the eventual fix looks messy and uncoordinated. Clear stakeholder alerts keep leadership informed and owners accountable.

Clear ownership for breach response

3. Assign Clear Ownership for Remediation

Breach response slows down quickly when no one knows who’s responsible. Ownership might sit with an engineer, a platform team, or the service owner, but it has to be clear from the start. Use whatever breadcrumbs you have, such as metadata, asset tags, and repo history, to track down responsibility fast. When ownership is fuzzy, tickets sit untouched and the Service Level Agreement breach worsens. Clear accountability makes it possible to track progress, escalate blockers, and confirm when the issue is resolved.

Ownership turns a breach from a shared problem into a managed task with a clear point of accountability. This step should be visible to all stakeholders, so there is no doubt about who is driving the remediation.
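The breadcrumb search described above can be written as a simple fallback chain. This is a minimal sketch, assuming three hypothetical inputs: an `owner` tag on the asset, a repo-to-team mapping (for example, exported from a service catalog), and a list of recent committers ordered newest first. A `None` result means no breadcrumb identified anyone, which should itself be escalated.

```python
from typing import Optional

def resolve_owner(
    asset_tags: dict[str, str],
    repo_owners: dict[str, str],
    recent_committers: list[str],
    repo: Optional[str] = None,
) -> Optional[str]:
    """Find a remediation owner by checking breadcrumbs in priority order."""
    # 1. An explicit owner tag on the asset (hypothetical tag key "owner").
    if asset_tags.get("owner"):
        return asset_tags["owner"]
    # 2. A repo-to-team mapping, if the affected repo is known.
    if repo is not None and repo in repo_owners:
        return repo_owners[repo]
    # 3. Last resort: the most recent committer in the repo history.
    if recent_committers:
        return recent_committers[0]
    return None
```

The ordering reflects a design choice: explicit, curated ownership data is trusted over inferred signals like commit history, which may point to someone who merely touched the code.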

4. Re-Prioritize the Breached Issue(s)

Once ownership is clear, escalate the breached item to the top of the queue and reassess risk immediately based on exploitability, asset value, and exposure. A missed patch on a production database is far riskier than one on an internal test server. Risk-based reprioritization prevents teams from treating SLA breaches as “just another ticket” and ensures they get the attention they demand. It is also a moment to check if other related issues should be escalated to avoid compounding exposure.
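Here is a minimal sketch of risk-based reprioritization that combines the three factors named above into one sortable score. The scales (exploitability from 0 to 1, asset value from 1 to 5) and the halving for non-exposed assets are hypothetical choices for illustration, not a standard such as CVSS or EPSS.

```python
from dataclasses import dataclass

@dataclass
class RiskInput:
    id: str
    exploitability: float  # 0.0-1.0, illustrative scale (1.0 = known exploitation)
    asset_value: int       # 1 (test server) to 5 (production database), illustrative scale
    internet_exposed: bool

def risk_score(r: RiskInput) -> float:
    """Combine exploitability, asset value, and exposure (hypothetical weighting)."""
    exposure = 1.0 if r.internet_exposed else 0.5
    return r.exploitability * r.asset_value * exposure

def reprioritize(items: list[RiskInput]) -> list[RiskInput]:
    """Return items ordered from highest to lowest risk."""
    return sorted(items, key=risk_score, reverse=True)

# The same vulnerability on a production database outranks an internal test server.
queue = reprioritize([
    RiskInput("test-server", 0.9, 1, False),
    RiskInput("prod-db", 0.9, 5, True),
])
print([r.id for r in queue])
```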

5. Launch a Timeboxed Remediation Sprint

A Service Level Agreement breach has already extended your exposure window, so the fix cannot drift further. Launch a timeboxed remediation sprint (24–72 hours), make it visible across teams, track progress in ticketing systems, and validate fixes with monitoring and code coverage tools. The goal is to compress time to remediation and bring focus to a critical issue that cannot wait for the normal backlog cycle. A timeboxed sprint shifts the response from open-ended effort to a managed countdown.
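The "managed countdown" can be as simple as a deadline plus a status string that is posted to the shared channel or ticket. This sketch keeps the timebox within the 24–72 hour range described above. The function names are hypothetical helpers, not part of any ticketing tool.

```python
from datetime import datetime, timedelta, timezone

MIN_HOURS, MAX_HOURS = 24, 72  # the 24-72 hour timebox range

def sprint_deadline(start: datetime, hours: int) -> datetime:
    """Return the sprint end, clamping the requested length to 24-72 hours."""
    clamped = max(MIN_HOURS, min(MAX_HOURS, hours))
    return start + timedelta(hours=clamped)

def sprint_status(deadline: datetime, now: datetime, fixed: bool) -> str:
    """Summarize where the sprint stands so it can be shared across teams."""
    if fixed:
        return "done"
    remaining = deadline - now
    if remaining <= timedelta(0):
        return "overdue: escalate"
    return f"{remaining.total_seconds() / 3600:.1f}h remaining"

start = datetime(2024, 6, 10, 9, 0, tzinfo=timezone.utc)
deadline = sprint_deadline(start, 48)
print(sprint_status(deadline, start + timedelta(hours=12), fixed=False))
```

Clamping the length prevents a "sprint" from quietly being set to two weeks, which would defeat the point of the timebox.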

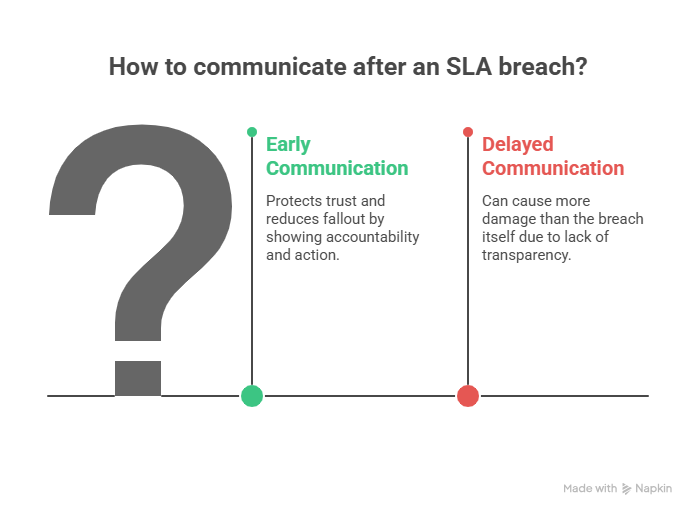

How to communicate after an SLA breach

6. Communicate Upstream (and Possibly Externally)

If a Service Level Agreement breach affects customers, regulators, or contracts, communicate early to show accountability and outline actions rather than excuses. In many organizations, this responsibility falls to a virtual CISO (vCISO), a role designed to bridge security strategy with business leadership and external stakeholders.

Clear, proactive communication protects trust and can reduce fallout even when the Service Level Agreement has been breached. Waiting to explain after the fact often does more damage than the breach itself. Create a communication template in advance so messaging is fast, accurate, and consistent under pressure.

7. Review Why the SLA Was Missed

After containing the issue, conduct a root cause analysis to determine whether the Service Level Agreement breach stemmed from tooling gaps, unclear ownership, or backlog neglect. Every Service Level Agreement breach is a signal that something in your system broke down. Reviewing the “why” prevents the same mistake from happening again and turns an operational failure into an opportunity to strengthen workflows. Do this review quickly while the details are fresh, but capture insights for a broader postmortem later.

8. Reinforce or Redesign Your Remediation Workflows

The final step is to make structural changes so this Service Level Agreement breach does not repeat. That may mean adding visibility into ticket lifecycles, improving ownership mapping, or automating handoffs between security and engineering. Sometimes, a more fundamental redesign of how remediation is tracked and validated is required.

Treat this moment as a chance to reinforce what works and redesign what does not. Drawing on vulnerability management best practices can help teams tighten workflows and avoid the same gaps that cause Service Level Agreement deadlines to slip. Modern platforms, like DevOcean, exist to prevent exactly these lapses by automating ownership, prioritization, and closure tracking. Use the breach as a catalyst to build stronger, more resilient remediation processes, rather than just patching over the immediate fire.

Turning Missed Deadlines Into Stronger Systems

A Service Level Agreement breach is more than a late ticket. It is an operational signal that something in your workflows failed, such as ownership, prioritization, or accountability. Treating it only as a one-off miss means the same problem will return in the next sprint or audit. The more practical move is to use every breach as a chance to strengthen the system.

Now’s the time to take a hard look at your remediation workflows. Do they give you the visibility to see what’s slipping, the automation to keep things moving, and the accountability to know who owns what?

DevOcean helps teams prevent Service Level Agreement lapses by clarifying ownership, driving fixes to completion, and turning SLA management into a source of resilience rather than risk. Book a demo today.

